What problem are we solving

Many agent failures are not caused by weak prompts or undertrained models, but by poor environments. The most capable agent can still fail if the world it operates in is chaotic, underspecified, or unsafe.

A common pattern of failure is when tools are designed without clear feedback. If a function returns only a generic error, the agent wastes cycles trying to debug instead of executing the user’s task. Another example is an NL2SQL agent with access to every table in a database — it spends more time exploring irrelevant data than answering the query. In both cases, the problem is environmental, not cognitive.

In a well-designed environment, agents are empowered with tools to gather additional context or information on their own. As agents and LLMs become more powerful, we will transition from proactive to reactive context construction — building environments that let agents pull the right data when needed instead of overloading them with static inputs.

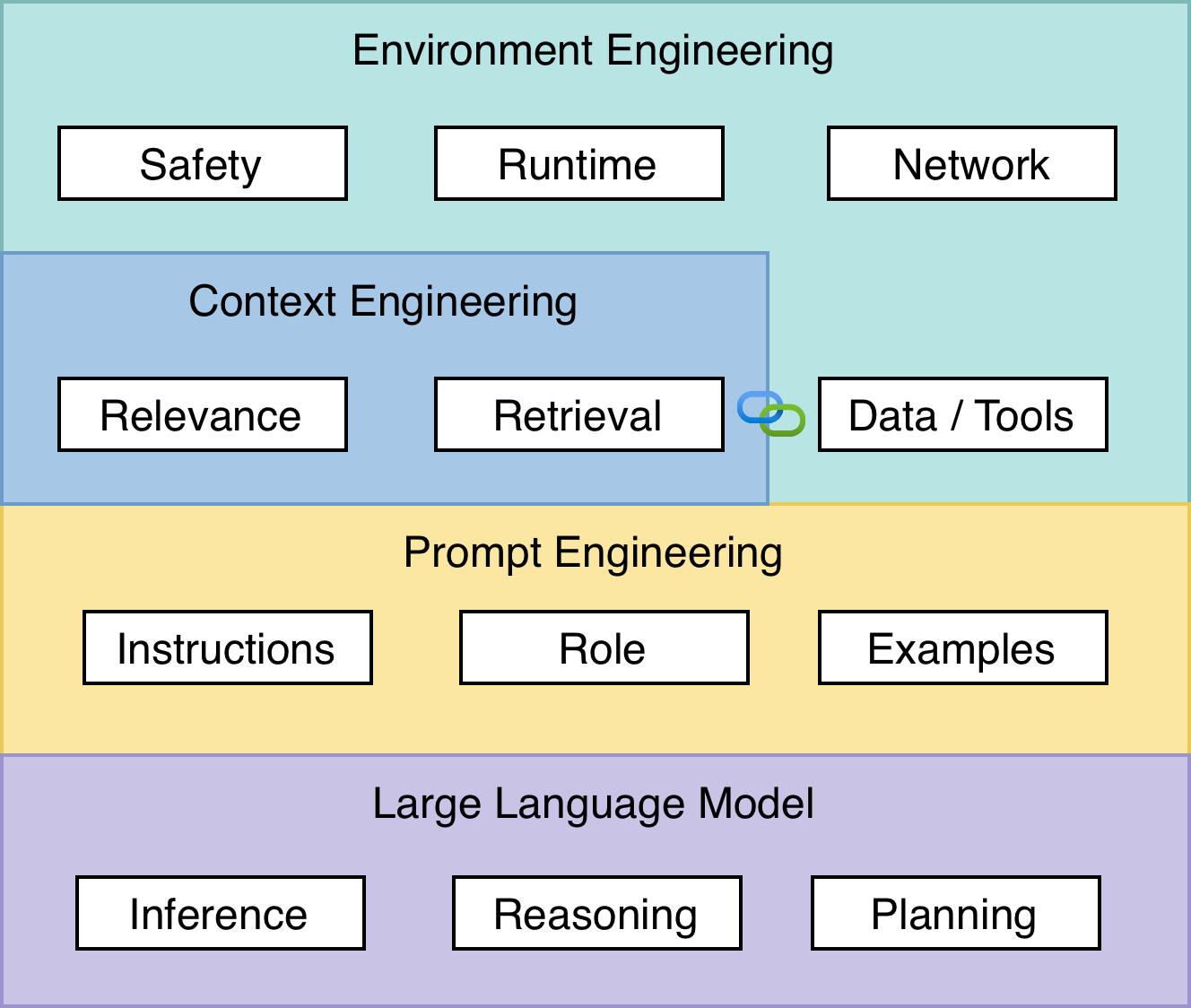

The three layers of agent design

Prompt engineering

You shape the instructions the model reads so it knows what to do and how to answer. You use roles, examples, formatting, and checks for clarity. Goal: consistent responses from a single model call.

Context engineering

You decide what extra information enters the context window at the right time. You add retrieval, memory, and results from tools so the model has the facts it needs. Goal: surface the right knowledge across many turns.

Environment engineering

You design the world around the agent — runtime, tools, resources, networks, credentials, feedback, logging, and approval paths. Goal: ensure robust, predictable, and verifiable agent behavior within defined operational boundaries.

Think of it as platform engineering applied to agents.

What is environment engineering

Environment engineering is the deliberate setup of everything the agent can see and do. It combines software design, operations discipline, and system safety into a single practice.

- Tools and actions: Decide which tools exist, which are allowed, and which are blocked. Provide clear, model-friendly descriptions.

- Resources and data: Expose read-only resources the agent can query without side effects. If an action has potential side effects or compute implications, make that explicit in the tool description so the agent understands cost, latency, or impact.

- Runtime and network: Run inside a container or sandbox. Version the environment (for example, package versions and system dependencies) alongside the agent code. Use allow lists for outbound calls and web access.

- Auth: Assign a dedicated identity to the agent and follow a least-privilege approach. The agent should only have the permissions required for its specific function.

- Human oversight: Add human review for high-risk or irreversible actions. Agents can plan or draft, but people approve and execute.

- Feedback and observability: Return actionable errors from tools. Both the agent and its environment are responsible for observability — tools, connectors, and APIs must log, trace, and surface their behavior.

- Environmental grounding: Agent environments that are grounded in their operational context consistently produce higher-quality results. Avoid assumptions about what an agent knows. Teach it about its surroundings through the system prompt. Do not rely on an LLM’s internal biases or defaults. Every expected action and boundary should be codified in the environment itself. The less the agent has to infer, the more consistent and predictable its behavior becomes.

Environment engineering for AI agents is inherently multidisciplinary. It merges principles from software engineering, data science, linguistics, and DevOps. Like platform engineering, its goal is to create an opinionated, productive, and safe environment where agents can act effectively while minimizing exploration and error.

Practical examples

1. Define safe tool boundaries (GitHub Copilot)

In GitHub Copilot, users define which Model Context Protocol (MCP) servers the agent can connect to and which tools are available. This configuration controls reach and behavior.

{

"mcpServers": {

"github": {

"url": "https://example-gateway/tools/github",

"tools": [

"list_pull_requests",

"create_branch"

]

}

}

}

By declaring tools explicitly, users give the agent clear operational limits — reducing risk and uncertainty while retaining flexibility.

2. Create micro-environments for different workflows (GitHub Copilot)

GitHub Copilot also allows developers to define custom chat modes, micro-environments for specific workflows. For example, a planning mode can read code but not modify it:

---

description: "Generate an implementation plan for new features or refactoring existing code."

tools: ['fetch', 'githubRepo', 'search', 'usages']

model: Claude Sonnet 4

---

# Planning mode instructions

You are in planning mode. Your task is to generate an implementation plan for a new feature or for refactoring existing code.

Do not make any code edits — only output a plan.

The plan should include:

- Overview

- Requirements

- Implementation Steps

- Testing

---

When this mode is active, Copilot can explore the workspace, identify affected files, and propose steps — but cannot modify files. This separation between planning and execution keeps actions reviewable and safe.

3. Control live web access (Azure AI Agent Service)

In Azure AI Agent Service, you can restrict what online sources an agent can read from by using the Bing Custom Search grounding tool. When setting it up, you define a list of websites that are allowed for retrieval. For example, you might only permit content from trusted Microsoft and GitHub domains such as: • learn.microsoft.com • github.blog • code.visualstudio.com

This configuration ensures that the agent can access only approved documentation sites, which reduces the risk of inaccurate or unsafe data being pulled from the wider web. It also helps make results reproducible since every search stays within a curated set of sources.

Summary

Prompt engineering shapes what a model should do. Context engineering ensures it knows what it needs to know. Environment engineering establishes the operational boundaries under which intelligent behavior can safely emerge.

We are still at the early stages of this discipline. As LLMs grow more autonomous, the focus will shift away from manually crafting prompts and toward designing environments that support intelligent, reliable, and self-directed agents. Environment engineering will become the foundation of how we achieve trustworthy autonomy at scale.

References

Agent Factory: Top 5 agent observability best practices for reliable AI – Yina Arenas, Microsoft Azure Blog (Agent Factory series). Outlines how agent observability (tracing reasoning steps, metrics, alerts, and integration with Azure Monitor) enables visibility and human oversight across an AI agent’s lifecycle.

Grounding with Bing Custom Search (preview) – Microsoft Learn Documentation (Azure AI Foundry). Describes the Grounding with Bing Custom Search tool, which confines an AI agent’s web access to a configurable set of allowed domains, ensuring retrieval-augmented generation is restricted to trusted web sources.

Unlocking new AI workloads in Azure Container Apps – Cary Chai, Microsoft Tech Community (May 2025). Highlights secure, containerized execution for AI agents, including Azure Container Apps “dynamic sessions” that run untrusted AI-generated code in isolated sandboxes protected by Hyper-V for runtime safety.

What is Azure AI Foundry Agent Service? – Microsoft Learn Documentation (Sept 2025). Overview of Azure AI Agent Service as the managed runtime that orchestrates tool calls, enforces content safety policies, and integrates with identity, networking, and observability systems to ensure AI agents are secure, governable, and scalable in production.